Google holds vast amounts of Earth imagery through its Street View product, and it is now testing machine learning as a way to harvest ‘professional-level photographs’ from that imagery. As detailed in a newly published study, Google researchers created an experimental deep-learning system that trawls Street View landscapes in search of high-quality compositions.

Machine learning, while capable at tasks that involve ‘well defined goals,’ struggles with subjective judgments such as determining whether a photograph has high aesthetic value. Google developed this latest deep-learning system to explore how artificial intelligence can learn such subjective concepts, training the system on professional photographs taken by humans.

Compositions identified by the AI from the Street View imagery were then automatically improved using an editing tool called ‘dramatic mask’ that enhances an image’s lighting.

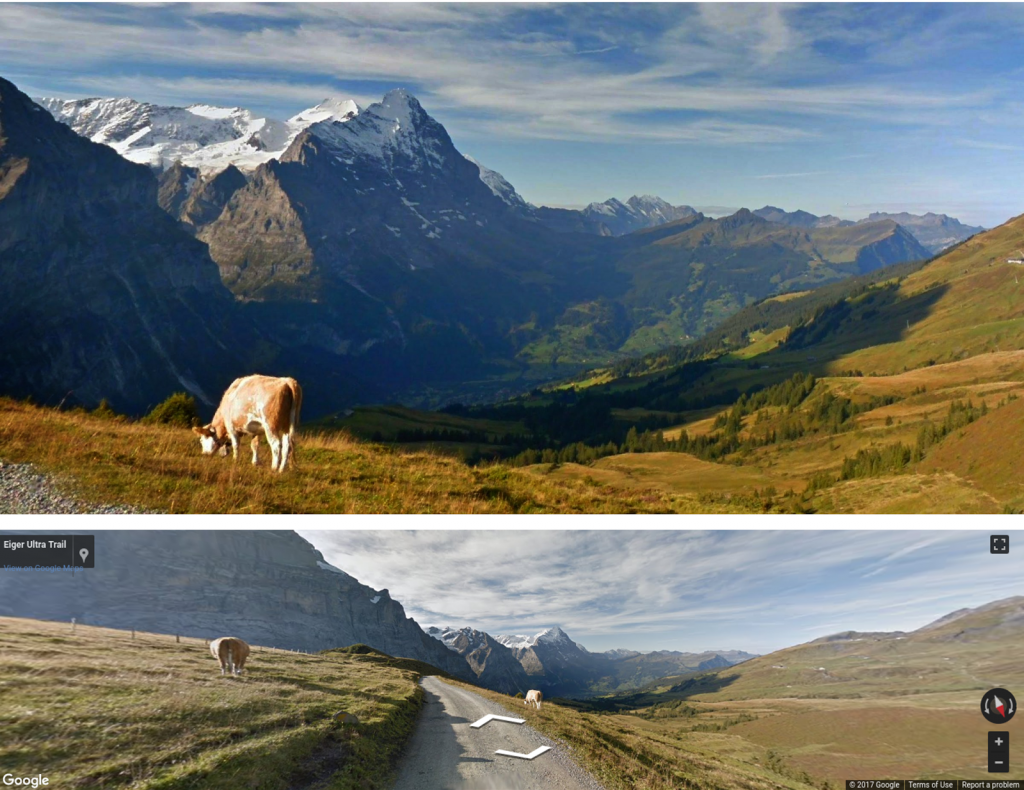

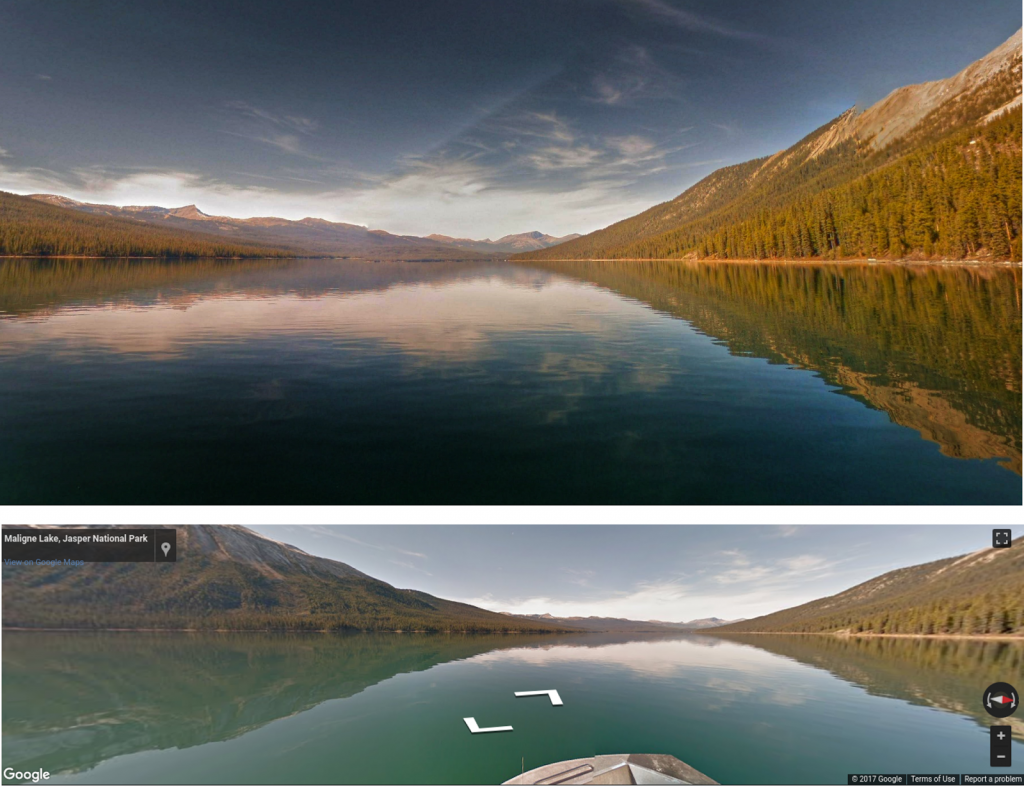

Below are some examples from the Google Research Blog:

“Using our system, we mimic the workflow of a landscape photographer,” the researchers explain, “from framing for the best composition to carrying out various post-processing operations.”

To test the quality of its AI-generated photos, Google asked professional photographers to blindly rate a collection of photos from various sources, including its Street View photos. The team’s conclusion from those ratings is that “a portion of our robot’s creation can be confused with professional work.”